Adventures in Machine Learning

Once upon a time we would build or upgrade our own PCs from components. Typically we'd buy a prebuilt computer, but over the subsequent years we'd keep upgrading components as the technology developed, until all that was left of the original machine was the case. But about 10 years ago the rate of progress in hardware seemed to slow down and there was less and less reason to get out the screwdrivers and pliers. Then came the cloud, and now all you need is a decent laptop computer, with all the interesting hardware existing in some Azure datacentre located who-knows-where. I have to admit that for all the advantages of cloud computing, I do miss the satisfaction of building physical hardware.

One area where people do still build computers is for computer games. This has provided a market for specialised hardware, particularly graphics cards, which are optimised for high-performance matrix operations. I have no interest whatsoever in computer games, but these graphics cards can be put to a more interesting use: machine learning. So we decided, somewhat on a whim, to buy a high-end GPU card for accelerated machine learning.

Now of course you don't need to buy a machine to do machine learning; you can just use Azure. But ML typically means very long runs on a powerful machine, and that works out to be quite expensive. For example, at the time of writing an Azure NV3 GPU VM is on offer, but it still costs about £25 a day. So if you need more than a couple of months' worth of computing, building your own machine becomes attractive.

Libraries like PyTorch and TensorFlow support Nvidia's CUDA (Compute Unified Device Architecture) API, so it makes sense to buy a card with an Nvidia GPU that supports CUDA. There is a wide range of these chips at very different price points, but currently the best 'bang for the buck' looks like the Nvidia GeForce RTX 2060. This is the name of the graphics chip; the actual GPU cards are made by a number of manufacturers. Ours is made by MSI, cost about £370, and is sold as a "Gaming Z" device, whatever that means.

Actually, what it means is that it has multi-coloured LEDs that glow and pulse. You might think this would indicate what the GPU was doing in some way, but in fact the lights seem to perform no function whatsoever. If you can survive without the lightshow, you can get a slightly cheaper graphics card, but this particular one happened to be available for quick delivery, and the underlying electronics is the same. The card also has one HDMI output and no less than three DisplayPort outputs, which I presume is to support multiple monitors for people playing computer games. I am guessing that pretending you are a soldier, or a gangster who drives fast cars, is more effective the more screens you have. Whatever the reason for them being there, we won't be using them.

(Re-) Building a Computer

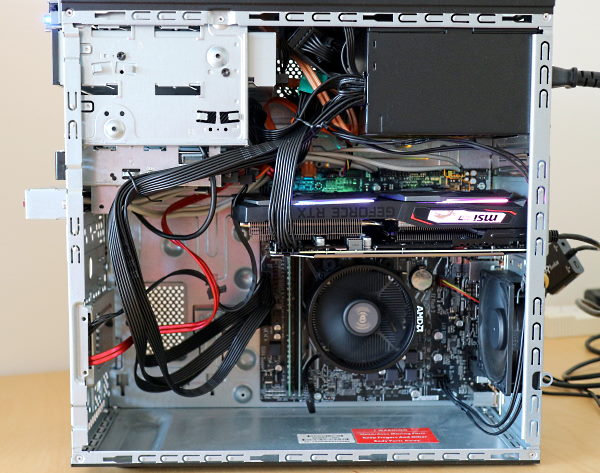

From the half-dozen old PCs and servers lying around unused, we decided to use an old Compaq Presario from about 2008 with an AMD processor. It had a PCIe x16 card slot which, on the face of it, looked as though it ought to work. We also ordered a new power supply with the six/eight-pin auxiliary power lead required by these high-end graphics cards (they can draw up to 160 watts, which is more than the motherboard can supply through the expansion slot). We then spent about a day trying to get the system to recognise the graphics card, while also learning about the CUDA API. We concluded that the motherboard was incompatible in some way and, not wanting to spend another day working out precisely why a 2008 PCIe x16 socket was subtly incompatible with a 2020 PCIe x16 device, we simply ordered a new motherboard. We chose an ASUS board with an AMD Ryzen processor and 8 GB of memory pre-installed, which we further upgraded to 20 GB by replacing one of the two memory modules. Naturally, these were DDR4, which is incompatible with the dozens of spare DDR3 memory modules we have accumulated. Motherboard and processor were bought as a bundle for about £270, with an additional £50 on memory and £50 for the new PSU. Together with the £370 graphics card, the total spend was £740.
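To make the power reasoning concrete, here is a small sketch using the nominal limits from the PCI Express specifications: 75 W from an x16 graphics slot, 75 W from a 6-pin auxiliary connector and 150 W from an 8-pin one. The helper names are hypothetical, and the sketch only considers a single auxiliary connector, although real cards sometimes use two.

```python
# Nominal power limits from the PCI Express specifications, in watts.
SLOT_W = 75  # what an x16 graphics slot on the motherboard can deliver
CONNECTOR_W = {"6-pin": 75, "8-pin": 150}  # auxiliary leads from the PSU


def extra_power_needed(card_watts: float) -> float:
    """Watts the card must draw from auxiliary PSU leads beyond the slot."""
    return max(0.0, card_watts - SLOT_W)


def smallest_connector(card_watts: float):
    """Smallest single auxiliary connector covering the shortfall.

    Returns None if the slot alone is enough, and raises ValueError if
    no single connector is sufficient.
    """
    need = extra_power_needed(card_watts)
    if need == 0:
        return None
    for name, watts in sorted(CONNECTOR_W.items(), key=lambda kv: kv[1]):
        if watts >= need:
            return name
    raise ValueError("card needs more than one auxiliary connector")


if __name__ == "__main__":
    card = 160  # the peak draw quoted for this class of card
    print(f"{card} W card: {extra_power_needed(card):.0f} W beyond the slot, "
          f"smallest single lead: {smallest_connector(card)}")
```

For a 160 W card the slot leaves an 85 W shortfall, which is more than a 6-pin lead is rated for, hence the need for a PSU with the larger connector.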

We first tested the motherboard, power supply and GPU "on the bench" and verified that the GPU was recognised by both Windows and Linux. Windows did a great job of detecting the new hardware and installing drivers for it. The Nvidia Windows drivers on the MSI site, on the other hand, seemed to require an hour of downloading and installing all manner of utilities, which then told us bluntly that they were "not compatible" without any further detail. It would have been nice to know that before we invested the hour. The Linux driver installation was similarly fraught, and again involved a lot of trial and error and visits to Stack Overflow and the like.
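Once the drivers are in, a quick way to confirm that the card is actually visible is to ask both the driver and the ML library. The sketch below queries the driver's `nvidia-smi` tool (its `-L` option lists the GPUs it can see) and PyTorch (`torch.cuda.is_available()`, `torch.cuda.device_count()` and `torch.cuda.get_device_name()`). It assumes `nvidia-smi` is on the PATH and that PyTorch was installed with CUDA support; the function names are hypothetical, and each check simply returns an empty list if its tool is missing.

```python
import shutil
import subprocess


def gpus_from_nvidia_smi() -> list:
    """GPUs reported by the Nvidia driver's nvidia-smi tool (empty if unavailable)."""
    exe = shutil.which("nvidia-smi")
    if exe is None:
        return []
    try:
        out = subprocess.run(
            [exe, "-L"], capture_output=True, text=True, timeout=30, check=True
        ).stdout
    except (subprocess.SubprocessError, OSError):
        return []
    # Lines look like "GPU 0: <name> (UUID: ...)"; keep only those.
    return [line.strip() for line in out.splitlines() if line.startswith("GPU ")]


def gpus_from_pytorch() -> list:
    """CUDA devices visible to PyTorch (empty if PyTorch or CUDA is missing)."""
    try:
        import torch
    except ImportError:
        return []
    if not torch.cuda.is_available():
        return []
    return [torch.cuda.get_device_name(i) for i in range(torch.cuda.device_count())]


if __name__ == "__main__":
    print("nvidia-smi:", gpus_from_nvidia_smi() or "no GPUs found")
    print("PyTorch:   ", gpus_from_pytorch() or "no CUDA devices found")
```

If `nvidia-smi` lists the card but PyTorch does not, the driver is working and the problem is more likely a CPU-only PyTorch build.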

Testing, testing

When we finally got it all working it was time to do some testing. I wrote a very simple matrix multiplication loop in Python and timed it on my Surface Pro 6 (which has an i5 processor), on an Azure NV6 VM, and on the rebuilt computer, first with CUDA disabled and then with CUDA enabled. To test on a more typical ML problem, I also ran a similar series of tests using the ResNet-152 computer vision model (which won the 2015 ImageNet Large Scale Visual Recognition Challenge), running a number of training epochs with its PyTorch implementation.
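The original benchmark script isn't shown, so the following is only a sketch of the kind of test described: a naive triple-loop matrix multiplication in pure Python, plus an optional PyTorch comparison on the CPU and, if available, on CUDA. The function names, matrix sizes and repeat counts are arbitrary assumptions, so its timings will not match the results table.

```python
import random
import time


def naive_matmul(a, b):
    """Multiply matrices stored as lists of rows, using plain Python loops."""
    if not a or not b or len(a[0]) != len(b):
        raise ValueError("inner dimensions do not match")
    n, m, p = len(a), len(b), len(b[0])
    result = [[0.0] * p for _ in range(n)]
    for i in range(n):
        row, out = a[i], result[i]
        for k in range(m):
            aik, b_row = row[k], b[k]
            for j in range(p):
                out[j] += aik * b_row[j]
    return result


def best_time(fn, *args, repeats=3):
    """Best wall-clock time in seconds over `repeats` calls of fn(*args)."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        fn(*args)
        best = min(best, time.perf_counter() - start)
    return best


def torch_matmul_time(size, device, repeats=10):
    """Time `repeats` size x size matrix products with PyTorch on `device`."""
    import torch  # imported lazily so the pure-Python part runs without PyTorch

    x = torch.rand(size, size, device=device)
    y = torch.rand(size, size, device=device)
    torch.matmul(x, y)  # warm-up: the first call includes one-off set-up costs
    if device == "cuda":
        torch.cuda.synchronize()  # CUDA calls are asynchronous; wait before timing
    start = time.perf_counter()
    for _ in range(repeats):
        torch.matmul(x, y)
    if device == "cuda":
        torch.cuda.synchronize()  # make sure all queued products have finished
    return time.perf_counter() - start


if __name__ == "__main__":
    n = 150  # kept small: the pure-Python loop is very slow
    a = [[random.random() for _ in range(n)] for _ in range(n)]
    b = [[random.random() for _ in range(n)] for _ in range(n)]
    print(f"pure Python, {n}x{n}: {best_time(naive_matmul, a, b, repeats=1):.2f} s")
    try:
        import torch
    except ImportError:
        torch = None
    if torch is not None:
        print(f"PyTorch CPU, 1000x1000 x10: {torch_matmul_time(1000, 'cpu'):.3f} s")
        if torch.cuda.is_available():
            print(f"PyTorch CUDA, 1000x1000 x10: {torch_matmul_time(1000, 'cuda'):.3f} s")
```

The warm-up call and the `torch.cuda.synchronize()` calls matter: without them a GPU timing can either include one-off initialisation or stop the clock before the queued work has actually run.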

Matrix multiplication is exactly the kind of work a graphics card is optimised for, so one would expect good performance. In fact it was 33 times faster with the GPU than without, which is probably approaching some kind of upper limit. Training the ResNet model wasn't quite as impressive, but still gave a speed-up of about 18 times. It is quite possible that newer models, designed with GPUs in mind, would see a bigger improvement. Even so, being almost 18 times faster than a very good CPU makes a big difference, because it means you can perform 18 times as many experiments in the same time.

This was already an impressive speed improvement, but it was even more impressive compared with the same tests on my day-to-day machine, a Microsoft Surface Pro 6 with an Intel i5 processor. Obviously this is going to be slower, especially as it is designed to be a highly portable device. On the Surface the matrix multiplication test took nearly five minutes (270 s to be precise). This means that the re-born Compaq Presario with its graphics card is 100 times faster than my laptop at matrix multiplication, and about 46 times faster at training ResNet-152. So a model that would take a whole day to train on my laptop could be trained in around half an hour.

I also tried provisioning a GPU-equipped Azure VM which, as mentioned above, was on offer at the time of writing. This was the NV6, the most powerful configuration I could find, which includes a Tesla M60 graphics accelerator. Tesla cards are more expensive counterparts of Nvidia's GeForce cards, packaged and marketed for server applications, and have nothing to do with the electric car company. This has confused a lot of people, including conference speakers I have seen, to the point that Nvidia has now dropped the Tesla name; its latest data-centre GPUs are based on an architecture called 'Ampere', after another electrical engineering pioneer. The M60 uses the same GPU silicon as Nvidia's older Maxwell-generation consumer cards, rather than the newer Turing chips in the GeForce RTX range. When I carried out the same tests on the Azure VM it was slightly slower using just the CPU (which shows how good our motherboard and CPU are), and slightly faster when using the Tesla GPU.

Results

| Compute resource | Matrix multiplication (s) | ResNet-152 (s) |
|---|---|---|
| Surface Pro 6 (i5) | 270 | 2020 |
| Reborn Compaq CPU | 91 | 780 |
| Reborn Compaq GPU | 2.7 | 44 |
| Azure NV6 CPU | 112 | 801 |
| Azure NV6 GPU (Tesla M60) | 2.6 | 42 |
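The speed-up factors quoted in the text are simply ratios of these timings. A small sketch that recomputes them from the results table (the row labels are abbreviated, and the dictionary and helper are illustrative rather than part of the original tests):

```python
# Timings in seconds (matrix multiplication, ResNet-152), from the results table.
RESULTS = {
    "Surface Pro 6 (i5)": (270, 2020),
    "Reborn Compaq CPU": (91, 780),
    "Reborn Compaq GPU": (2.7, 44),
    "Azure NV6 CPU": (112, 801),
    "Azure NV6 GPU (Tesla M60)": (2.6, 42),
}


def speedup(baseline: str, target: str) -> tuple:
    """How many times faster `target` is than `baseline`, per benchmark."""
    return tuple(b / t for b, t in zip(RESULTS[baseline], RESULTS[target]))


if __name__ == "__main__":
    for baseline in ("Reborn Compaq CPU", "Surface Pro 6 (i5)"):
        matmul, resnet = speedup(baseline, "Reborn Compaq GPU")
        print(f"GPU vs {baseline}: matmul x{matmul:.1f}, ResNet-152 x{resnet:.1f}")
```

It reports speed-ups of 33.7 and 17.7 against the Compaq's own CPU, and 100.0 and 45.9 against the Surface Pro 6, which shows how much smaller the GPU advantage is for a full training workload than for raw matrix multiplication.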

Our hardware spend of £740 (a little under $1000) got us a machine that performs similarly to a top-end Azure VM, which would cost between £50 and £100 a day to rent, but we also spent a lot of time getting it all working. Unless you expect to have a machine running continuously for a month or more, I would recommend using a VM from a cloud provider like Microsoft Azure. If you think you are going to be training models pretty much continuously, then building a machine looks very cost-effective. If you don't want to build one (and to my mind that's half the fun), you could also buy a high-end gaming PC. These are a bit more expensive and you might have to put up with a very silly enclosure, but you avoid the time-consuming process of checking that all the components are compatible and assembling them.
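The rent-versus-build decision comes down to a simple break-even calculation. The sketch below divides the £740 hardware spend by a daily rental rate, using the £25/day offer price mentioned earlier and the £50-100/day range above. It deliberately ignores electricity, resale value and the time spent on assembly, so treat the result as a rough guide only; the function name is hypothetical.

```python
def break_even_days(hardware_cost: float, daily_rate: float) -> float:
    """Days of continuous rental after which buying the hardware is cheaper.

    Ignores running costs (electricity), resale value and labour.
    """
    if daily_rate <= 0:
        raise ValueError("daily_rate must be positive")
    return hardware_cost / daily_rate


BUILD_COST = 740  # GBP, total hardware spend for the rebuilt machine

if __name__ == "__main__":
    for rate in (25, 50, 100):  # GBP per day of VM rental
        days = break_even_days(BUILD_COST, rate)
        print(f"£{rate}/day: break-even after {days:.1f} days")
```

With these figures it prints break-even points of 29.6, 14.8 and 7.4 days, consistent with the "month or more" rule of thumb: at the discounted rate the build pays for itself after about a month of continuous use, and much sooner at full price.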

There's no denying that there is something rather satisfying about taking an old computer and turning it into a supercomputer, with or without the multi-coloured LEDs. It also means you can leave it running without worrying about racking up an unexpected bill from a cloud provider. But it's a good idea to work out exactly what you need it for before you start on this journey. It can be more expensive and time-consuming than you expect, especially compared with a few clicks in the Azure portal.